Hosted by Long and Short Reviews.

Click here to read everyone else’s replies to this week’s question and here to see the full list of topics for the year.

If we’re talking about AI as in LLMs (large language models like ChatGPT), I am wholeheartedly opposed to the use of them for the following reasons:

The article linked to above is one of many examples of these chatbots giving users advice that could end their lives in excruciating ways, such as claiming that poisonous wild plants are safe to eat or that venomous snakes are harmless.

Most of us can generally spot at least some bad advice about a small to medium-sized number of topics right away, but few people have deep enough knowledge or experience in every important subject to realize how terrible and even deadly some LLM responses are, especially if the person asking is vulnerable, like a kid or someone who has an intellectual disability.

2) Stealing Other People’s Work

At best, the vast majority of these programs were trained on the artwork and writing of people who were not paid for their work, did not consent to it being used, and haven’t even been given something as simple as attribution for their ideas.

Just like you (hopefully!) wouldn’t sneak into your neighbour’s backyard to steal their dog, cat, tomatoes, flowers, children, or anything/anyone else you might find there even if you don’t think anyone will notice or care, nobody should be stealing other people’s creative works either.

A business model that depends on unpaid, non-consenting people to make it feasible should not exist. If they need a wide variety of photos or different types of writing to make an LLM work, pay people fairly and regularly for their contributions! This is a basic business expense that should come as a surprise to no one.

3) Academic and Business Cheating

From what I have read, there is a tsunami of students and workers who have switched to using LLMs to write anything from papers to exam answers to reports for their bosses. This means that they are not learning or reinforcing proper written communication skills and will become even less likely to notice mistakes in future work.

We need to encourage strong writing, critical thinking, and communication skills in people of all ages. Asking one of these models to do all of your work for you teaches little, if anything, useful, and in the long run it only makes life harder for those who use it because they won’t develop the skills they need in these areas.

4) Environmental Degradation

All of these queries are also wasting staggering amounts of water and electricity for frivolous purposes. I’ve read varying estimates of how much is being wasted and I don’t know which ones are correct, so I won’t quote specifics here.

Climate change is already wreaking havoc on the environment, and the last thing we should be doing now is making it worse.

I’d argue that the cost of the wasted water, electricity, and other materials should be the full and permanent responsibility of the LLM companies. They will either lose money or will have to dramatically raise the price of using them to account for all of the environmental damage they have done, are doing, and will do in the future.

5) Privacy Concerns

Do you really want LLMs to know so much private information about you? Who else is going to have access to your chats about your physical or mental health, occupation, relationships, sexual orientation, religious and political views, finances, possibly embarrassing family medical or legal history, and other potentially sensitive subjects? If or when that information is leaked, whether purposefully or unintentionally, where will it go from there and how might it be used against you?

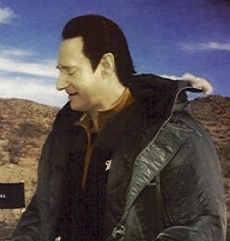

Image credit: Grcote at Wikipedia

On the other hand…

If we’re talking about Data from Star Trek, I’d welcome him to human society without hesitation.

He’s one of my favourite Star Trek: The Next Generation characters, and I’d trust his judgment on nearly any subject because, instead of simply guessing which word in a sentence should come next as LLMs do, he was capable of deep, independent thought, had a wide breadth of knowledge, and was sentient.

If you asked him a question, he would either immediately understand it or ask for more details if necessary.

He would also never advise mixing glue into the cheese one puts on pizza to make it better, as one LLM did a year or two ago, because he was trustworthy and understood basic human physiology, among many other subjects.

End rant! 😉